New Off-the-Shelf Datasets from Appen

Creating a high-quality dataset with the right degree of accuracy for training machine learning (ML) algorithms can be a heavy lift when getting AI and ML projects off the ground. Not every company has a specialized team of ML PhDs, data engineers, and human annotators at its disposal, largely because such a team is expensive. Instead, machine learning teams are turning to off-the-shelf training datasets. These datasets offer a quick, cost-effective alternative, addressing both the cold-start problem and model improvement without the risks of collecting and annotating data from scratch, because high-quality datasets can be used as-is or customized for specific project types.

Finding datasets with high-accuracy labels can also be difficult. Many available datasets are old, uncleaned, or irrelevant. To help companies get their ML initiatives off the ground, Appen has made its entire catalog of off-the-shelf datasets available from its website. These datasets consist of high-quality training data, so companies know up front the accuracy they’re getting, which removes variability from the model training process. Users can now browse diverse speech and text datasets and request quotes for one or multiple datasets, including:

- Fully transcribed speech datasets for broadcast, call center, in-car, and telephony applications

- Pronunciation lexicons, both general and domain-specific (e.g., names, places, natural numbers)

- Part-of-speech-tagged lexicons and thesauri

- Text corpora annotated for morphological information and named entities

https://youtu.be/fVNEDzeRVeQ

Machine Learning Projects that Benefit from Off-the-Shelf Training Datasets

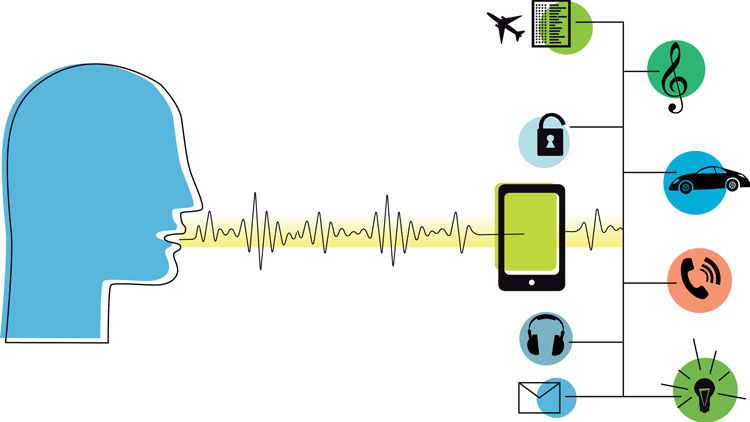

Cataloged by regional dialect and speaking style, Appen’s collection of over 230 high-quality datasets gives companies essential tools for customizing AI offerings such as automatic speech recognition (ASR), text-to-speech (TTS), and more for their target markets. AI applications based on natural language processing (NLP) and conversational understanding require a high level of linguistic expertise during development. High-quality datasets that have been annotated with NLP in mind remove a significant part of that burden for the teams developing these projects. Typical use cases for Appen’s resource-saving datasets include automatic speech recognition, TTS projects, in-car human-machine interfaces, digital and virtual assistants, sentiment and emotion analysis, and machine translation.

Automatic Speech Recognition (ASR)

Accurate automatic speech recognition (ASR) systems are crucial for improving communication and convenience across a wide range of applications — from video and photo captioning, to identifying questionable content, to building more helpful AI assistive technologies. But, as we’ve mentioned, building highly accurate speech recognition models usually requires vast amounts of computing and annotation resources. The plot thickens when you consider not only the staggering number of languages around the globe but also dialects within those languages.

Text to Speech (TTS)

Similar challenges exist for TTS projects. This assistive technology can be highly effective in applications such as mobile phones, in-car systems, consumer medicine, and virtual assistants. All of these depend on TTS systems to function, and those systems need to be trained with high-quality speech data to produce accurate responses.

Machine Translation

Automatic translation, if highly accurate, can mean the difference between a good and bad customer experience. Building your machine translation engine with high-quality training data is crucial to achieving the kind of accuracy that users find helpful rather than frustrating. As you may have guessed, creating a coherent and useful translation engine requires massive amounts of expertly annotated language data.

These are just a few examples of projects that can benefit from Appen’s off-the-shelf datasets. Because the obstacles of time and money involved in creating your own datasets are removed, you can bring your natural language product to market faster and with confidence that your ML model has been trained with the highest level of quality available.

Why You Should Consider Off-the-Shelf Datasets

There are many reasons an off-the-shelf dataset might be right for you. Not only is it advantageous from a price and speed perspective, but growing requirements for data privacy and security from both customers and authorities can make it complicated to use the data you have on hand. Companies need to be cautious, as using data that wasn’t cleared for ML or labeling may land them in the news for the wrong reasons.

There is also a growing desire to reduce bias in machine learning models, and using off-the-shelf training datasets from a provider that implements responsible AI practices can help ensure your model is trained with diverse, high-quality data. This is particularly important for ASR systems, where racial and ethnic disparities have been identified.

Traditionally, pre-built datasets focused on NLP. Today, they also cover computer vision, particularly sensing and mobility applications (e.g., 3D-sensing cameras, delivery drones, autonomous vehicles, and robotics), and there is a need for broader image and video datasets. The growing availability of ready-made datasets stems from a shift in overall training data demand toward more specific and complex use cases.

See Appen’s growing list of off-the-shelf datasets here, or click to learn more about our custom AI training data solutions.