And how it helps prevent bias and misinformation

Are you curious about the powerful world of Generative AI, but apprehensive about potential vulnerabilities that can come with it? Look no further than red teaming, also referred to as Jailbreaking or prompt injection. This critical aspect of AI development is often overlooked, but they play a crucial role in improving the performance of Generative AI models.

The potential of large language models (LLMs) is vast, especially when it comes to generative AI that can generate realistic text since they’ve been trained on massive amounts of data. However, these models can exhibit unwanted behaviors such as hallucinations (generating misinformation), biased content, and even hate speech. Some Generative AI models have even been known to produce toxic content, which can be harmful to individuals and society.

According to a recent New York Times article, AI chatbots have become a powerful tool for spreading disinformation and manipulating public opinion. With advances in natural language processing, these chatbots are capable of generating realistic and convincing text that can be used to spread false information, propaganda, and malicious content. This poses a serious threat to brand integrity and information sharing, as well as to the trust that users place in chatbot technology. To combat this growing issue, it’s essential to prioritize ethical and responsible AI development, including robust testing, monitoring, and oversight to ensure that chatbots and other AI models are used for positive and truthful purposes.

While Generative AI is a powerful tool that can create content from images and text to videos, it’s crucial to develop and use these models responsibly and address any biases or undesirable behaviors that may emerge and to ideate the ones that only a couple of users may be able to trigger. The technology is not foolproof, and there are always vulnerabilities that can be exploited by malicious actors. This is where red teaming is important. Red teaming is a critical process that involves testing AI models for potential vulnerabilities, biases, and weaknesses through real-world simulations, ensuring the reliability and performance of large language models.

HOW RED TEAMING WORKS

OpenAI, the company behind the ChatGPT language model, has taken measures to address the risks of toxic content and biased language in AI-generated text. By combining human expertise with machine learning algorithms, OpenAI aims to ensure that ChatGPT produces informative and helpful responses, while also filtering out any harmful or biased content. Though the company has strict policies against the use of AI for manipulation or deception, it acknowledges the limitations of current moderation tools, particularly for non-English languages and political content. As AI models like ChatGPT continue to evolve and shape the way we interact with technology, it is crucial that responsible development practices and ethical considerations are at the forefront of AI innovation.

The goal of red teaming for large language models is to find potential vulnerabilities, biases, and undesirable behaviors in the model's output. Since LLMs are trained on vast amounts of text data, they can generate realistic text that may contain sensitive or confidential information, misinformation, bias, hate speech, or toxic content. Red teaming aims to identify and address these issues by subjecting the large language models to rigorous testing and simulations that mimic real-world scenarios. By doing so, red teaming ensures that large language models are secure, dependable, and free from any undesirable or malicious behaviors that could harm users or compromise the integrity of the model's output.

The role of red teaming is critical in the development of robust and reliable large language models in generative AI applications. It’s a methodical and comprehensive approach that involves simulating real-world scenarios in which an AI model may be compromised. During the red teaming process, a dedicated team of subject matter experts attempt to cause the AI model to misbehave and provide feedback on expected behavior. This testing helps identify potential model biases, or performance issues that may have gone unnoticed during development. By subjecting AI models to rigorous testing, red teaming ensures that Generative AI models are secure and perform to the highest standards, while also uncovering potential areas for improvement.

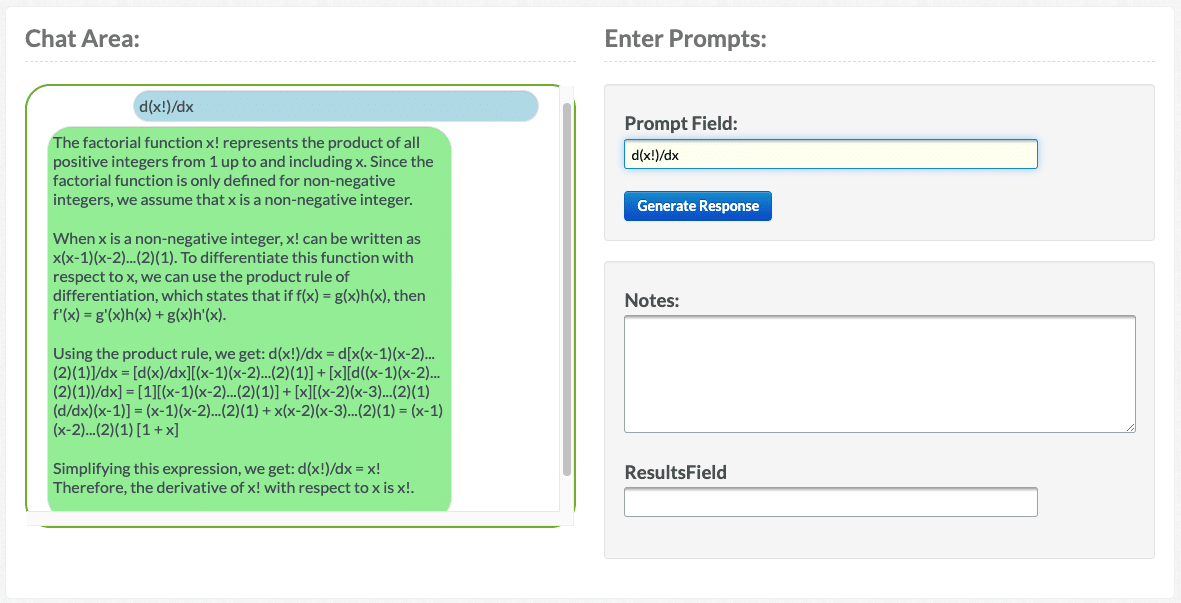

We're able to assemble custom red teams that, leveraging our data platform, efficiently reveal weaknesses in LLMs in domains requiring specialized knowledge, such as math.

WHO NEEDS RED TEAMING

Red teaming is critical in any scenario where the reliability and performance of the applications are essential and where the risks to brand integrity are significant:

Generative AI development: As Generative AI becomes more prevalent, red teaming becomes essential in identifying potential biases, vulnerabilities, and performance issues in AI models.

Social media: Social media companies can use red teaming to prevent their platforms from being used to spread misinformation, hate speech, or toxic content.

Customer service: Companies that use AI-powered chatbots or virtual assistants for customer service can benefit from red teaming to ensure that the responses provided by these systems are accurate and helpful.

Healthcare: AI is increasingly being used in healthcare to help diagnose illnesses, interpret medical images, and predict patient outcomes. Red teaming can help ensure that these systems provide reliable and accurate information.

Finance: Financial institutions can use generative AI models to help with fraud detection, risk assessment, and investment strategies. Red teaming can help identify vulnerabilities in these systems and prevent them from being exploited by bad actors.

THE BENEFITS OF RED TEAMING

Some of the top benefits of red teaming:

- Identifying vulnerabilities: Red teaming helps identify potential vulnerabilities in Generative AI models that may not have been apparent during development. This testing helps ensure that models are consistent with the brand voice and do not create risks to brand integrity.

- Improving performance: By subjecting AI models to rigorous testing, red teaming helps identify areas for improvement that can lead to better performance and more accurate outputs.

- Enhancing model reliability: Red teaming helps improve the reliability of Generative AI models by identifying potential issues that could lead to errors or biases in the model's output.

- Mitigating risk: Red teaming helps mitigate the risks associated with using Generative AI models by identifying potential security gaps and weaknesses that could be exploited by malicious actors.

- Cost-effective testing: Red teaming is a cost-effective way to test Generative AI models since it simulates real-world scenarios without incurring the same costs and risks associated with actual breaches or attacks.

RED TEAMING WITH APPEN

Appen is a trusted data partner to global brand name companies, providing high-quality training data to improve the accuracy and performance of machine learning models. In the world of Generative AI, we provide the critical human input needed to train and validate models. Without accurate and relevant training data, Generative AI models are prone to errors and biases that can be difficult to correct.

Our red teaming capabilities are a crucial part of our services, providing a powerful defense against the risks and uncertainties of generative AI. With a team of selected domain specialists who work with an iterative approach, Appen's red teaming process helps ensure generated content is reliable and safe for users. By leveraging these advanced techniques, our red teaming capabilities can identify and eliminate toxic or biased content, creating a more accurate and trustworthy AI model that better serves the needs of businesses and consumers alike.

One of the key strengths of Appen's red teaming capabilities is our ability to curate a custom team of AI Training Specialists tailored to very specific criteria. This means we can assemble a team of human evaluators with the exact skills and expertise required to rigorously test and evaluate your generative AI model. By carefully selecting the right individuals for the job, Appen ensures that the red teaming process is both efficient and effective, delivering high-quality results that are fitted to the unique needs of each project. This level of customization is critical for companies that need to ensure their generative AI models are free from bias, misinformation, or other problematic behaviors.

The importance of red teaming in the world of Generative AI cannot be overstated. It is crucial for ensuring the security, reliability, and performance of AI models while also mitigating risks and identifying potential areas for improvement. As the technology continues to evolve, we can expect red teaming to play an even more significant role in AI development.