Rapid-Sprint LLM Evaluation & A/B Testing in Multiple Complex Domains

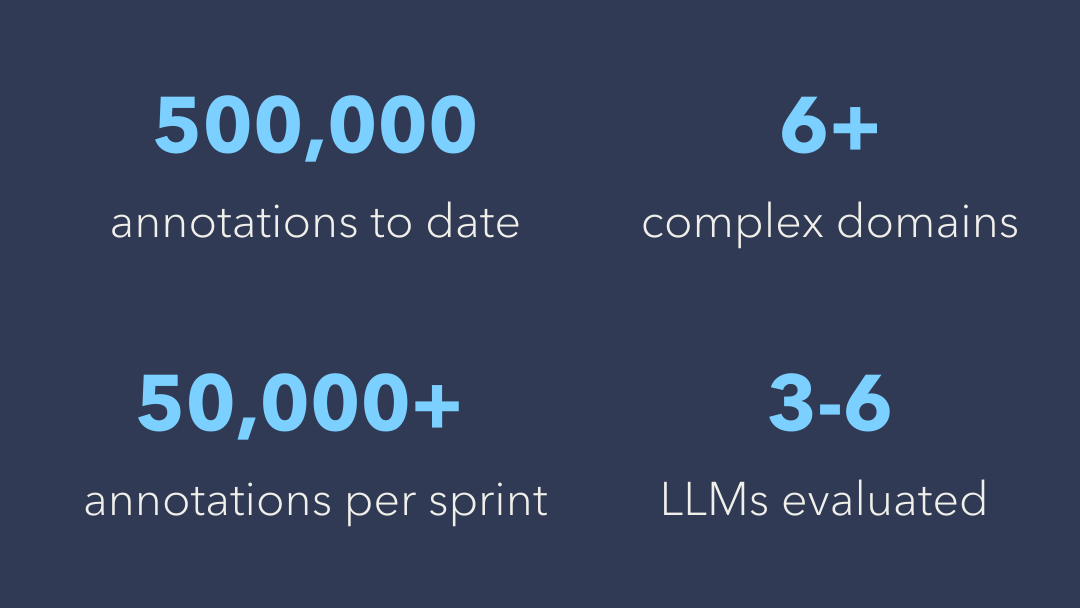

- 500,000 annotations to date

- 6+ complex domains

- 50,000+ annotations per sprint

- 3-6 LLMs evaluated

Introduction

A leading model builder partnered with Appen to run rapid-sprint evaluations of 3-6 large language models (LLMs) on tasks spanning general domains and complex ones, including healthcare, legal, finance, programming, math, and automotive. Drawing on Appen's expert evaluators and its AI data platform (ADAP), the project delivered over 500,000 annotations in 5-day sprints of 50,000+ annotations each, enabling rapid iteration and continuous improvement. These evaluations benchmarked model accuracy, relevance, and adherence to Responsible AI standards.

Goal

The primary objective of this project was to assess and improve the performance of multiple LLMs across diverse industries. Through structured evaluations and A/B testing, the project aimed to give the model builder precise insight into each model's effectiveness and to confirm alignment with industry-specific requirements and Responsible AI principles.

Challenge

Managing rapid-sprint evaluations across multiple LLMs and domains presented several key challenges:

- Domain-Specific Complexity: Ensuring that evaluations captured the nuances of each subject area, such as legal, healthcare, finance, programming, math, and automotive.

- High-Volume Annotations: Delivering over 500,000 annotations while maintaining a high standard of consistency and AI data quality across various model outputs.

- Comparative Model Assessment: Evaluating 3-6 different LLMs side by side required a structured and scalable methodology.

- Responsible AI Standards: Ensuring that models met ethical AI guidelines, including bias mitigation and transparency.

- Tight Timeframes: Delivering 50,000+ annotations in every 5-day sprint required efficient processes and expert coordination.

Solution

To tackle these challenges, Appen employed a structured evaluation framework:

- Recruit Expert Evaluators - Appen engaged subject-matter experts with specialties in healthcare, legal, finance, programming, and other complex industries to ensure high-quality evaluations tailored to industry-specific standards.

- Structured A/B Testing Process - Evaluators assessed model responses across multiple domains, ranking outputs based on accuracy, relevance, fluency, and alignment with ethical AI principles.

- Data Management & Quality Control - The project utilized Appen’s AI data platform (ADAP) to streamline workflow execution, manage large-scale AI data annotation, and ensure quality through

- Benchmarking & Insights Delivery - Comparative performance insights were generated to inform model refinement, guiding improvements in response quality and domain adaptability.

Results

The rapid-sprint evaluation and A/B testing framework provided the model builder with actionable insights to optimize LLM performance across multiple domains. Key achievements include:

- 500,000+ annotations to benchmark model accuracy, relevance, and Responsible AI compliance.

- Evaluation across 3-6 LLMs, providing comparative insights for model refinement.

- 50,000+ annotations per 5-day sprint, ensuring rapid iteration and continuous improvement.

- Expansion into supervised fine-tuning and red teaming, leveraging evaluation insights to enhance model robustness and adaptability.

- Improved domain-specific model accuracy through structured human feedback.

By leveraging expert evaluations, scalable A/B testing, and AI-driven workflow management, Appen empowered the client to enhance LLM performance across diverse industries, ensuring alignment with both business needs and Responsible AI principles.